Model Context Protocol, done right.

We help teams design, ship, and scale MCP servers and clients—so your AI apps can talk to tools, data, and workflows cleanly and securely.

Based in Metro Detroit, serving clients nationwide.

Build reliable MCP servers

Type-safe tooling, sensible auth, solid logging. We turn real systems—databases, file stores, APIs—into clean MCP resources & tools.

Connect to your LLM stack

OpenAI Responses/Agents, Anthropic clients, and more. One server, many consumers—without one-off glue code.

Enterprise patterns

RBAC, PHI/PII handling, audit trails, rate limits, and on‑prem connectivity. Practical, production-first defaults.

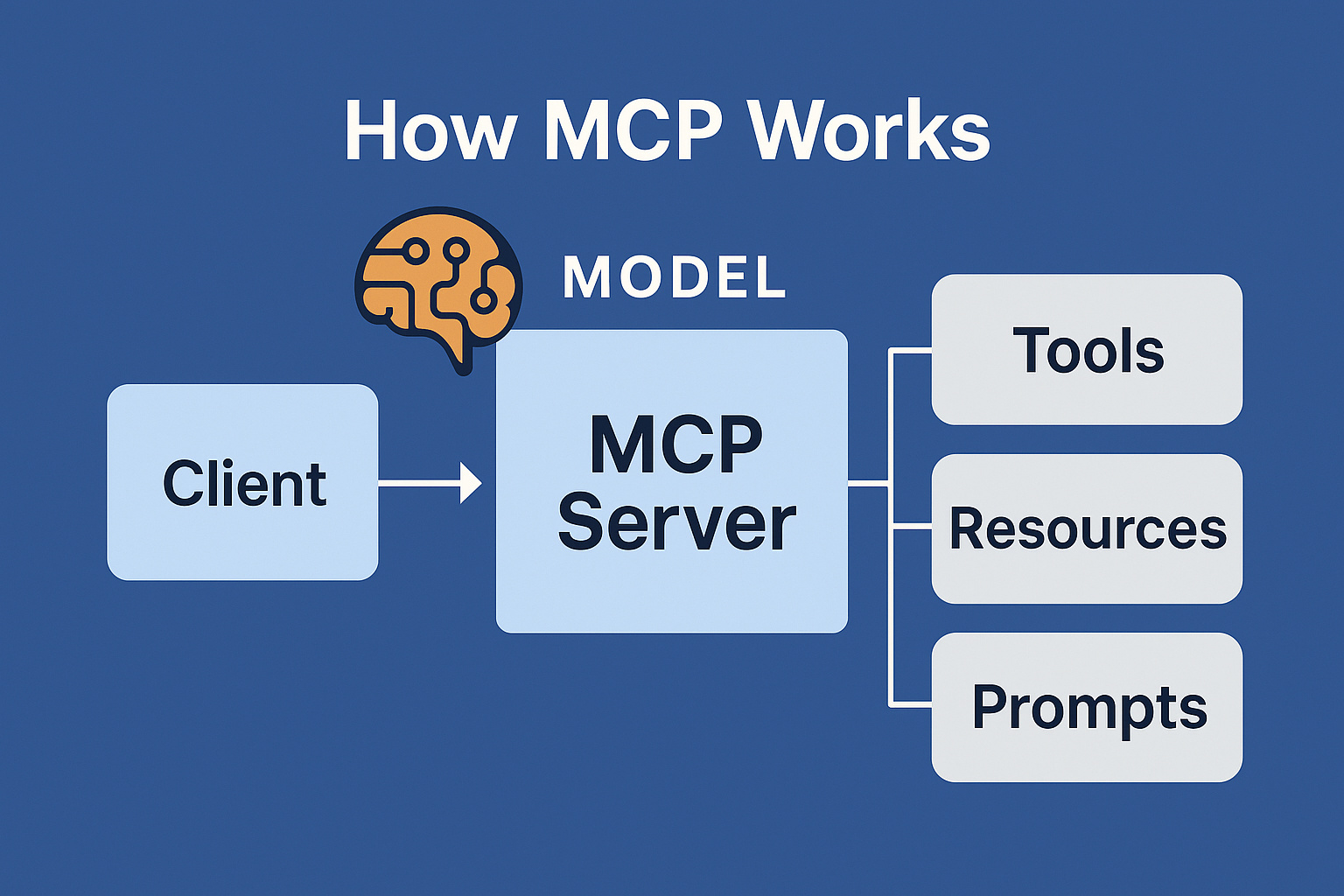

What is MCP (in plain English)?

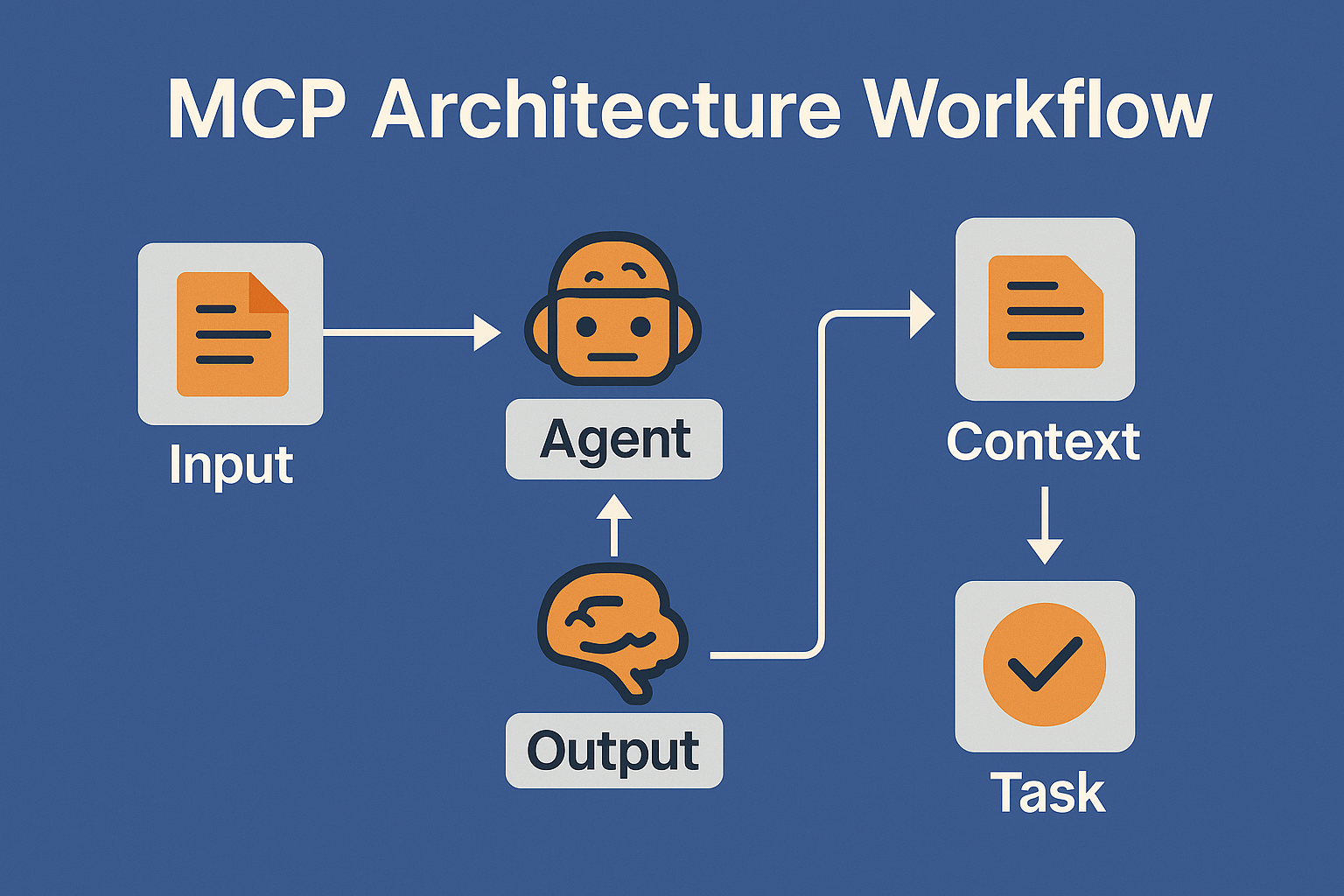

MCP standardizes how AI apps talk to external systems (files, databases, APIs, and actions) via a simple client ↔ server protocol. It’s like giving your model a universal port to plug into your stack.

- Servers expose resources (readable data) and tools (actions).

- Clients (your AI app) request, read, and call them—safely.

- You get interoperability instead of custom adapters for each app.
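To make the split between resources and tools concrete, here is a minimal sketch of the request/response flow in plain Python. It assumes nothing beyond the standard library and is not the official SDK: the method names (`resources/list`, `resources/read`, `tools/list`, `tools/call`) follow the MCP specification's JSON-RPC methods, while `RESOURCES`, `TOOLS`, `add`, `handle`, and the `notes://welcome` URI are hypothetical names chosen for illustration. The result shapes are simplified, and the initialization handshake, capability negotiation, and input schemas are left out.

```python
import json
from typing import Any, Callable

# Hypothetical in-memory data standing in for a real file store or database.
RESOURCES: dict[str, str] = {
    "notes://welcome": "Hello from a toy MCP-style server.",
}


def add(a: int, b: int) -> int:
    """Example tool: an action the client can invoke."""
    return a + b


# Hypothetical tool registry: tool name -> callable.
TOOLS: dict[str, Callable[..., Any]] = {"add": add}


def handle(request: dict[str, Any]) -> dict[str, Any]:
    """Dispatch one JSON-RPC 2.0 request and return the response."""
    method = request.get("method")
    params = request.get("params", {})

    if method == "resources/list":
        result = {"resources": [{"uri": uri, "name": uri} for uri in RESOURCES]}
    elif method == "resources/read":
        uri = params["uri"]
        result = {"contents": [{"uri": uri, "text": RESOURCES[uri]}]}
    elif method == "tools/list":
        result = {"tools": [{"name": name} for name in TOOLS]}
    elif method == "tools/call":
        value = TOOLS[params["name"]](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}]}
    else:
        # Standard JSON-RPC error code for an unknown method.
        return {
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "error": {"code": -32601, "message": "Method not found"},
        }

    return {"jsonrpc": "2.0", "id": request.get("id"), "result": result}


if __name__ == "__main__":
    # A client reads a resource, then calls a tool.
    read = {"jsonrpc": "2.0", "id": 1, "method": "resources/read",
            "params": {"uri": "notes://welcome"}}
    call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
            "params": {"name": "add", "arguments": {"a": 2, "b": 3}}}
    print(json.dumps(handle(read)))
    print(json.dumps(handle(call)))
```

Any client that speaks the same methods can use this server without a custom adapter, which is the interoperability point above. In a real build you would likely reach for an MCP SDK rather than hand-rolling the dispatch.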

FAQ: quick answers

No, you don’t need a separate integration for each AI app. The goal is interoperability: build once, connect to multiple clients.

Security lives on the server: auth, permissions, and auditing. Keep secrets close to your systems.
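As a rough illustration of server-side permissions and auditing, the sketch below gates every tool call behind a role check and writes an audit record before anything runs. It uses only the Python standard library. All names here (`ROLE_PERMISSIONS`, `call_tool`, `PermissionDenied`, the example roles and tools) are hypothetical, and a real deployment would take roles from its own identity provider rather than a hard-coded table.

```python
import logging
from typing import Any, Callable

# Dedicated logger so audit records can be routed separately from app logs.
audit_log = logging.getLogger("audit")

# Hypothetical role-to-tool permissions (assumption: roles come from your auth layer).
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "analyst": {"run_report"},
    "admin": {"run_report", "delete_record"},
}


def run_report(name: str) -> str:
    """Example read-only tool."""
    return f"report:{name}"


def delete_record(record_id: int) -> str:
    """Example destructive tool that only admins may call."""
    return f"deleted:{record_id}"


TOOLS: dict[str, Callable[..., Any]] = {
    "run_report": run_report,
    "delete_record": delete_record,
}


class PermissionDenied(Exception):
    """Raised when a role is not allowed to call a tool."""


def call_tool(role: str, tool_name: str, arguments: dict[str, Any]) -> Any:
    """Check permissions, record the attempt, then run the tool."""
    allowed = tool_name in ROLE_PERMISSIONS.get(role, set())
    # Log the decision but not the arguments, which may contain PHI/PII.
    audit_log.info("role=%s tool=%s allowed=%s", role, tool_name, allowed)
    if not allowed:
        raise PermissionDenied(f"{role} may not call {tool_name}")
    return TOOLS[tool_name](**arguments)


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    print(call_tool("analyst", "run_report", {"name": "q3"}))
    try:
        call_tool("analyst", "delete_record", {"record_id": 7})
    except PermissionDenied as exc:
        print(f"denied: {exc}")
```

Because the check and the log both sit in the server, the client never holds credentials for the underlying system, and every attempt is recorded whether or not it was allowed.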